The Gist

This blog post breaks down a real-world machine learning challenge: selecting the most relevant clinical documents from large patient encounters to support prior authorization. It explains how the team framed document selection as a relevance and ranking problem, why recall was prioritized over precision, and how a multi-stage pipeline — combining deduplication, lightweight filtering, and fine-tuned language models — improved reviewer efficiency. This piece also shares concrete evaluation methods and unexpected findings from comparing custom-trained models to GPT-based approaches, including why newer models didn’t necessarily perform better.

This post looks back at one of our earlier approaches to automated document selection for prior authorization.

When we first set out to solve this problem, our objective was to develop a reliable system that would have the most significant impact on saving time for physicians and hospital staff. At the time, GPT-4 was the latest available OpenAI model, so it was our natural point of comparison. What follows is a snapshot of that stage of the work, which helped us reason about relevance in a practical, production setting.

What Is Prior Authorization and How Did We Approach It?

Prior authorization is a routine part of healthcare operations used by health insurers to review certain medical procedures before they are performed.

For hospital staff (e.g., doctors, nurses, prior authorization teams), this means submitting clinical documentation that demonstrates why a requested procedure is medically necessary for a particular patient. Approval is often required before a procedure can be scheduled or reimbursed, and the outcome of the review determines whether care can proceed as planned or must be delayed, revised, or appealed.

In practice, the responsibility for navigating this process falls largely on health systems and their staff. Physicians and their teams spend an estimated 13 hours per week completing prior authorizations — much of that time devoted to locating and assembling the appropriate documentation.

Although the intent is straightforward, the practical workflow often becomes inefficient. A single patient encounter can generate dozens to hundreds of clinical documents across various categories, including clinical notes, lab results, imaging reports, progress notes, discharge summaries, and more.

Only a small fraction of these documents are typically relevant to the authorization request, yet staff must find a way to sift through the entire set to determine which materials should be submitted. If the correct documents are not submitted, the request may be denied or returned for additional information, introducing avoidable delays for both healthcare providers and patients.

It is not surprising, then, that 89% of physicians report that prior authorization contributes to burnout, and that 29% say it has led to a serious adverse patient outcome, according to the American Medical Association.

For hospitals and health systems, the challenge is that document relevance is not determined solely by document type or a small set of keywords. It depends on how the clinical details contained in a document relate to the specific procedure being requested and the associated service line. Important information may appear in unexpected places, and documents with similar titles can vary substantially in their clinical significance. As a result, identifying the appropriate documents becomes a careful, time-consuming task that requires contextual understanding.

The approach described here treated this as a relevance classification problem and used a structured, multi-stage system to narrow the search space and apply deeper contextual evaluation.

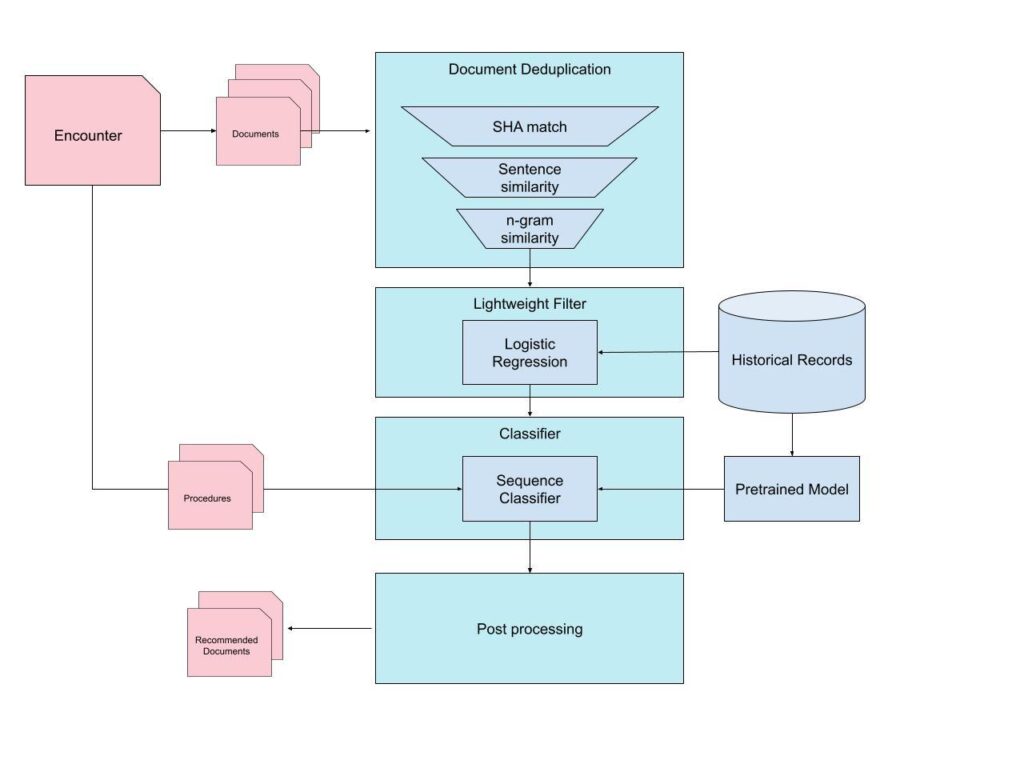

The pipeline combined deduplication, metadata-based filtering, and a fine-tuned language model trained to assess procedural relevance. Although our current implementation has evolved since then, the ideas and modeling lessons from this phase were important in shaping how we think about the problem today. The full system from that early iteration is illustrated in the figure above.

Pre-Filtering

Deduplication

Since some encounters can have many hundreds of documents, it is helpful to narrow down the candidate documents by removing redundancies. Health records frequently contain duplicates or near-duplicates. This occurs for various reasons, including auto-generated notes, repeated PDF exports, or extracts that differ only in formatting. Therefore, the first stage of filtering was deduplication.

Our deduplication pipeline operated in multiple layers:

Exact-match detection via hashing

The system computed content-level hashes (e.g., SHA-based fingerprints) for each document’s extracted text representation. Identical hashes correspond to exact duplicates and can be removed immediately. This rapid operation eliminated a significant number of redundant files at minimal cost.
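A minimal sketch of this exact-match step follows. It assumes documents arrive as a mapping from a hypothetical document ID to extracted text, and it uses SHA-256 as the fingerprint. The post says only "SHA-based", so the specific hash is an assumption.

```python
import hashlib


def content_hash(text: str) -> str:
    """Return a SHA-256 hex digest of a document's extracted text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def drop_exact_duplicates(docs: dict[str, str]) -> dict[str, str]:
    """Keep the first document seen for each distinct content hash.

    `docs` maps a (hypothetical) document ID to its extracted text;
    insertion order decides which copy survives.
    """
    seen: set[str] = set()
    unique: dict[str, str] = {}
    for doc_id, text in docs.items():
        digest = content_hash(text)
        if digest not in seen:
            seen.add(digest)
            unique[doc_id] = text
    return unique


docs = {
    "doc-1": "Echocardiogram shows reduced ejection fraction.",
    "doc-2": "Echocardiogram shows reduced ejection fraction.",  # exact copy
    "doc-3": "Progress note: patient reports palpitations.",
}
print(list(drop_exact_duplicates(docs)))  # ['doc-1', 'doc-3']
```

Hashing the raw text means that any change, even a single extra space, produces a different digest. That gap is what the near-duplicate stage is for.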

Semantic similarity for near-duplicates

Exact matching alone is insufficient because many “duplicates” differ only by minor formatting or template variations. To address these cases, we applied sentence-level similarity to detect documents that share clinical content despite superficial differences. However, sentence-level comparisons often fail on OCR-generated documents or documents with template variations.

As a fallback, we applied n-gram-based similarity. This two-stage similarity approach captured both obvious and subtle duplicates, leaving a smaller, cleaner set of candidate documents.
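The two-stage check could look roughly like the sketch below. The post does not say how sentence-level similarity was computed. As a simple stand-in, this sketch uses Jaccard overlap of normalized sentences, then falls back to Jaccard overlap of character 5-grams. Both thresholds and the n-gram size are illustrative assumptions, not tuned values.

```python
import re


def sentences(text: str) -> set[str]:
    """Split on sentence-ending punctuation; normalize case and whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text)
    return {" ".join(p.lower().split()) for p in parts if p.strip()}


def char_ngrams(text: str, n: int = 5) -> set[str]:
    """Character n-grams over lowercased text with whitespace collapsed."""
    norm = " ".join(text.lower().split())
    if len(norm) < n:
        return {norm} if norm else set()
    return {norm[i : i + n] for i in range(len(norm) - n + 1)}


def jaccard(a: set[str], b: set[str]) -> float:
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def is_near_duplicate(a: str, b: str,
                      sent_threshold: float = 0.8,    # illustrative
                      ngram_threshold: float = 0.6) -> bool:  # illustrative
    # Stage 1: documents sharing most of their sentences are duplicates.
    if jaccard(sentences(a), sentences(b)) >= sent_threshold:
        return True
    # Stage 2 (fallback): character n-grams tolerate OCR character errors
    # that make otherwise-identical sentences fail to match exactly.
    return jaccard(char_ngrams(a), char_ngrams(b)) >= ngram_threshold


note = "Patient seen for chest pain. ECG normal. Plan: stress test."
ocr_copy = "Patient seen for chest pa1n. ECG norma1. Plan: stress test."
print(is_near_duplicate(note, ocr_copy))  # True (caught by the n-gram fallback)
```

In the OCR example, only one of three sentences matches exactly, so stage 1 misses the pair. Most character 5-grams still agree, so the fallback catches it. Comparing every pair of documents is quadratic in encounter size, which matters more once encounters reach hundreds of documents.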

Irrelevant Document Classification

The next stage of filtering was a lightweight classifier to eliminate documents that are unlikely to be helpful for authorization.

The goal here was not to determine relevance for a specific procedure, but simply to discard obviously irrelevant content before invoking a larger, more expensive model. We used a Logistic Regression classifier because it’s fast, inexpensive to run, and interpretable. Other lightweight models (Naive Bayes, linear SVMs, MLPs) were also viable, but Logistic Regression provided the best balance of speed, stability, and performance in our experiments.

So why not use this approach for the whole problem?

Understanding whether a document is relevant to a specific medical procedure requires deeper semantic reasoning, contextual understanding, and pattern recognition than classical ML can provide. The lightweight model was intended purely to remove documents that are clearly out of scope.

We found that the most effective features were basic metadata rather than the raw document text:

Document age

Document category (note, imaging, lab, etc.)

Document type (progress note, clinical summary, etc.)

Service line (cardiology, gastrointestinal, orthopedics, etc.)

This model intentionally excluded the procedure itself. Procedure codes are extremely sparse, and incorporating them added complexity without improving performance.
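A sketch of such a metadata-only classifier using scikit-learn is shown below. The column names, the toy rows, the scaling of document age, and `class_weight="balanced"` are all assumptions made for illustration. As in the text, the procedure is deliberately left out of the features.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical column names for the metadata features listed above.
CATEGORICAL = ["doc_category", "doc_type", "service_line"]
NUMERIC = ["doc_age_days"]


def build_prefilter() -> Pipeline:
    features = ColumnTransformer([
        # Categories unseen during training encode as all zeros.
        ("cat", OneHotEncoder(handle_unknown="ignore"), CATEGORICAL),
        ("num", StandardScaler(), NUMERIC),
    ])
    # class_weight="balanced" is an assumption to offset class imbalance.
    clf = LogisticRegression(max_iter=1000, class_weight="balanced")
    return Pipeline([("features", features), ("clf", clf)])


# Toy, made-up training data (label 1 = relevant, 0 = irrelevant).
train = pd.DataFrame({
    "doc_category": ["note", "imaging", "lab", "note",
                     "lab", "imaging", "note", "lab"],
    "doc_type": ["progress note", "echo report", "cbc", "clinical summary",
                 "bmp", "chest x-ray", "progress note", "cbc"],
    "service_line": ["cardiology", "cardiology", "cardiology", "orthopedics",
                     "orthopedics", "cardiology", "gastrointestinal",
                     "gastrointestinal"],
    "doc_age_days": [3, 10, 400, 5, 700, 30, 900, 365],
})
labels = [1, 1, 0, 1, 0, 1, 0, 0]

model = build_prefilter().fit(train, labels)
# predict_proba columns follow model.classes_, i.e. [0, 1].
p_irrelevant = model.predict_proba(train)[:, 0]
```

The learned coefficients map back to individual one-hot categories and the age feature. This is part of what makes a linear model interpretable at this stage.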

The model’s performance is shown below:

| Class | Precision | Recall |
|---|---|---|
| Irrelevant | 0.996 | 0.533 |
| Relevant | 0.070 | 0.941 |

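The design goal of this stage was to remove obviously irrelevant documents with very high precision, even at the cost of lower recall. In practice, this approach filtered out roughly half of all irrelevant documents (recall of 0.533) while keeping precision above 99% (0.996), so nearly all documents removed at this step were truly out of scope. The low precision on the Relevant class (0.070) is acceptable here: this stage only needs to let relevant documents through (recall of 0.941), and the downstream model makes the finer-grained relevance decision.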
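The post does not say how this operating point was chosen. One common way to pick it is to sweep thresholds on held-out data and keep the one with the highest recall for the Irrelevant class that still meets a target precision. The sketch below does this with scikit-learn's `precision_recall_curve`. The 0.99 target and the toy scores are assumptions.

```python
import numpy as np
from sklearn.metrics import precision_recall_curve


def threshold_for_precision(y_irrelevant, p_irrelevant, target_precision=0.99):
    """Return (threshold, recall) for the lowest score threshold whose
    precision on the 'irrelevant' class meets the target, or (None, 0.0).

    y_irrelevant: 1 if the document is truly irrelevant, else 0.
    p_irrelevant: model probability that the document is irrelevant.
    """
    precision, recall, thresholds = precision_recall_curve(
        y_irrelevant, p_irrelevant)
    # precision/recall have one extra final point (precision=1, recall=0)
    # with no threshold; drop it so indices line up with `thresholds`.
    ok = np.where(precision[:-1] >= target_precision)[0]
    if ok.size == 0:
        return None, 0.0
    # Thresholds increase and recall never rises with them, so the first
    # qualifying index gives the highest recall at the target precision.
    i = ok[0]
    return float(thresholds[i]), float(recall[i])


# Toy held-out labels and scores (1 = irrelevant).
y = [0, 0, 0, 1, 1, 1, 1]
p = [0.1, 0.2, 0.6, 0.5, 0.7, 0.8, 0.9]
print(threshold_for_precision(y, p))  # (0.7, 0.75)
```

Documents scoring at or above the returned threshold are discarded before the language model runs. Everything else moves on to document selection.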

Document Selection

With a reduced candidate set in hand, the next step was the main challenge: determining which documents truly support the medical necessity of the requested procedure.

This requires an understanding of clinical reasoning, procedure-specific justification patterns, and implicit cues within physician notes. This problem lends itself well to a fine-tuned Llama-based sequence classifier.

We began with a Llama architecture and continued pretraining on a large corpus of medical records. This step helped the model understand clinical syntax and terminology, recognize common documentation structures, and improve downstream classification performance.

The model was then fine-tuned on the document-selection dataset as a binary classifier. Each example was constructed as a structured prompt:

[DOCUMENT TEXT]

—

Document Type: [INSERT DOC TYPE]

Service Line: [INSERT SERVICE LINE]

Procedure Requested: [INSERT PROCEDURE]

Procedure Date: [INSERT DATE]

Document Age (days): [INSERT AGE]
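The template above can be rendered with a small helper like the hypothetical `build_prompt` below. The sketch makes three assumptions the post does not state: document age is measured in days relative to the procedure date, dates are written in ISO format, and fields are separated by single newlines.

```python
from datetime import date

# The separator line shown in the template (rendered as a dash).
SEPARATOR = "\u2014"


def build_prompt(document_text: str, doc_type: str, service_line: str,
                 procedure: str, procedure_date: date,
                 document_date: date) -> str:
    """Render one (document, procedure) example in the structured format."""
    # Assumption: age is counted from the document date to the procedure date.
    age_days = (procedure_date - document_date).days
    return "\n".join([
        document_text,
        SEPARATOR,
        f"Document Type: {doc_type}",
        f"Service Line: {service_line}",
        f"Procedure Requested: {procedure}",
        f"Procedure Date: {procedure_date.isoformat()}",
        f"Document Age (days): {age_days}",
    ])


prompt = build_prompt("Echo: EF 35%.", "Imaging Report", "Cardiology",
                      "Cardiac ablation", date(2023, 5, 10), date(2023, 5, 1))
print(prompt.splitlines()[-1])  # Document Age (days): 9
```

Placing the metadata after the document text keeps the clinical content first and the procedure context in a fixed, predictable position.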

Labels came from historical reviewer behavior:

0 = NEGATIVE (not selected)

1 = POSITIVE (selected)

One challenge was that historical labels typically indicate that a document was selected, but not for which procedure it was selected. Because authorization requests often involve multiple related procedures (e.g., several cardiac ablation codes), we generated one training example per procedure. This effectively treated each (document × procedure) pair as its own relevance decision, allowing the model to learn fine-grained associations.
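The expansion step can be sketched as follows. Because the historical label does not say which procedure a document was selected for, this sketch assumes a selected document is labeled positive for every procedure on the request. The `PairExample` type and the procedure codes are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PairExample:
    doc_id: str
    procedure: str
    label: int  # 1 = selected by a reviewer, 0 = not selected


def expand_pairs(doc_ids, procedures, selected_ids):
    """Create one training example per (document, procedure) pair.

    Assumption: the document-level selection label is copied to every
    procedure on the request.
    """
    selected = set(selected_ids)
    return [
        PairExample(d, p, int(d in selected))
        for d in doc_ids
        for p in procedures
    ]


examples = expand_pairs(["d1", "d2"], ["PROC_A", "PROC_B"], ["d1"])
print(len(examples))  # 4
```

Two documents and two procedures yield four examples. The selected document contributes a positive example for each procedure.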

During inference, the model produced a logit score representing the predicted relevance of each document × procedure pair. The resulting confidence was adjusted by an “age factor” based on the document’s age, since older documents are less likely to be relevant. Documents that passed a predetermined threshold were then ranked by their maximum confidence across procedures and returned to reviewers in descending order.
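A sketch of this scoring and ranking step is shown below. Several details are assumptions, since the post does not specify them:

- logits are mapped to confidences with a sigmoid;
- the age factor is a multiplicative exponential decay with a hypothetical 180-day half-life;
- the threshold is a placeholder value of 0.5.

```python
import math
from collections import defaultdict


def sigmoid(x: float) -> float:
    """Numerically stable logistic function mapping a logit to (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def age_factor(age_days: float, half_life_days: float = 180.0) -> float:
    """Hypothetical decay: the multiplier halves every half_life_days."""
    return 0.5 ** (age_days / half_life_days)


def rank_documents(pair_logits, doc_ages, threshold=0.5):
    """pair_logits: iterable of (doc_id, procedure, logit) from the model.
    doc_ages: doc_id -> age in days.
    Returns [(doc_id, confidence)] for documents whose best age-adjusted
    confidence passes the threshold, highest confidence first."""
    best = defaultdict(float)
    for doc_id, _procedure, logit in pair_logits:
        conf = sigmoid(logit) * age_factor(doc_ages[doc_id])
        best[doc_id] = max(best[doc_id], conf)  # max over procedures
    kept = [(d, c) for d, c in best.items() if c >= threshold]
    return sorted(kept, key=lambda dc: dc[1], reverse=True)


pairs = [("d1", "PROC_A", 2.0), ("d1", "PROC_B", -1.0),
         ("d2", "PROC_A", 0.5), ("d3", "PROC_A", 3.0)]
ages = {"d1": 0, "d2": 0, "d3": 720}
print([d for d, _ in rank_documents(pairs, ages)])  # ['d1', 'd2']
```

In this toy example, `d3` has the strongest raw logit. At 720 days old, though, its confidence is scaled by 0.0625, which drops it below the threshold.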

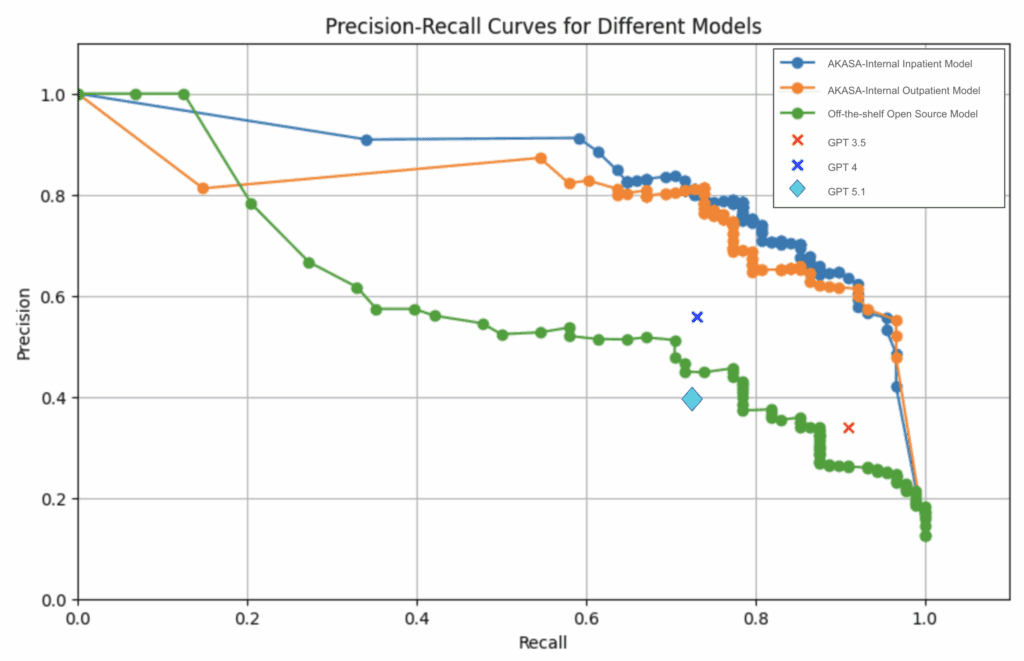

The above precision-recall curve compares our in-house models (labeled AKASA-Internal Inpatient Model and AKASA-Internal Outpatient Model) against GPT models (GPT-3.5, GPT-4, and GPT-5.1) and an off-the-shelf model (labeled Off-the-shelf Open Source Model). Because the comparison models were not fine-tuned on our dataset, we iterated on custom prompts for each of them so that every approach was evaluated as close to its best achievable performance as possible.

One key finding was that the off-the-shelf model significantly underperformed our in-house models. This is likely because our models were further pretrained and fine-tuned on domain-specific clinical data, which gives them a better understanding of medical record structures and terminology.

At the time of implementation, GPT-4 was the latest model available. Although we were able to get the GPT models to outperform the off-the-shelf model, they fell short of the performance we were hoping to see. The GPT models also did not return a confidence score with their predictions, which limited our ability to tune the system toward higher recall.

Now that GPT-5.1 is available, we revisited our implementation to add a new comparison point. Surprisingly, GPT-5.1 performed worse than GPT-4 in both precision and recall. One possible explanation is that the newer model needs a different prompt-engineering approach, and reusing our earlier prompts with only minor adjustments was not enough to show its full capability.

Evaluation Methodology

Precision and recall

The most straightforward way to evaluate a binary classifier is with precision and recall.

We evaluated our model with Precision@TopK and Recall@TopK for K = 1, 5, and 10. Ideally, a model achieves both high precision and high recall, but in the real world we often have to choose which to optimize for. For document selection, recall was deemed more important than precision: a reviewer can quickly discard an irrelevant document, but missing a relevant document may force them to search manually through the full encounter.
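For reference, the two top-K metrics can be computed as below, assuming a ranked list of unique document IDs and the set of IDs a reviewer actually selected. When fewer than K suggestions are returned, this sketch divides precision by the number actually shown. That is one of two common conventions; the other divides by K.

```python
def precision_at_k(ranked_ids, relevant_ids, k):
    """Fraction of the top-k suggestions that reviewers actually selected."""
    top_k = ranked_ids[:k]
    if not top_k:
        return 0.0
    relevant = set(relevant_ids)
    # Convention: divide by the number of suggestions shown (<= k).
    return sum(1 for d in top_k if d in relevant) / len(top_k)


def recall_at_k(ranked_ids, relevant_ids, k):
    """Fraction of reviewer-selected documents that appear in the top k."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0
    return len(relevant.intersection(ranked_ids[:k])) / len(relevant)


ranked = ["d4", "d1", "d7", "d2", "d9"]
selected = {"d1", "d2", "d3"}
for k in (1, 5, 10):
    print(k, precision_at_k(ranked, selected, k),
          round(recall_at_k(ranked, selected, k), 3))
```

Here `d3` was selected by the reviewer but never suggested, so recall stays at 2/3 no matter how large K gets.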

Ranking performance

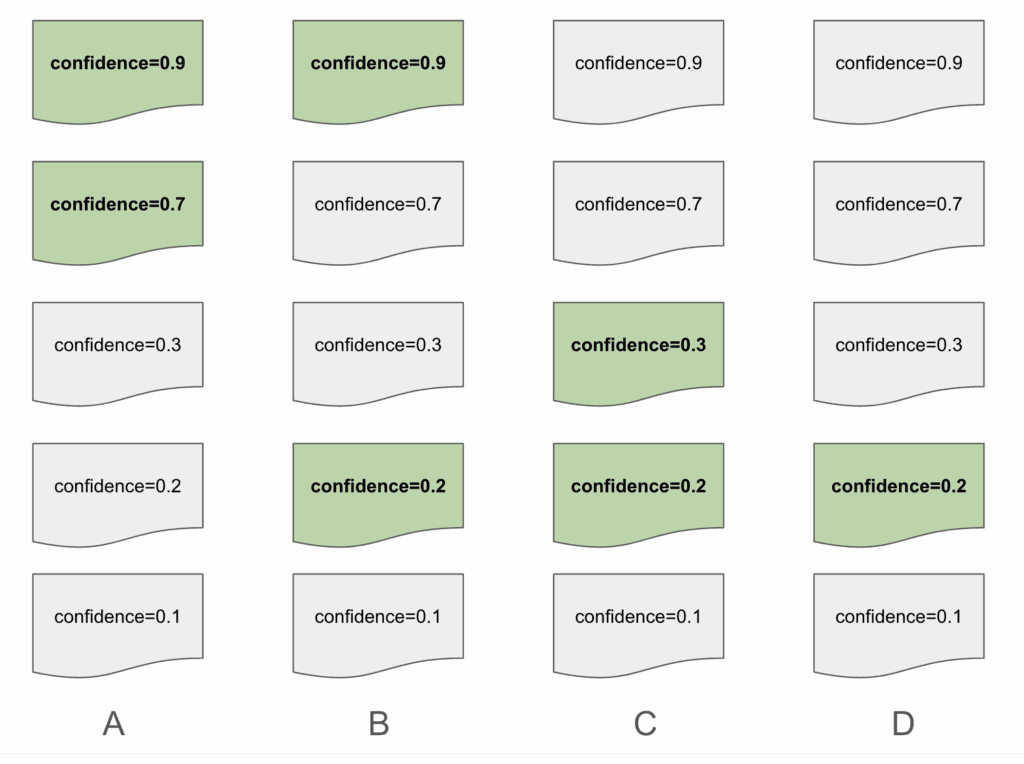

From a modeling perspective, precision and recall are useful metrics, but they don’t fully capture the user experience. Reviewers strongly prefer and expect relevant documents to appear near the top of the list. To capture this, we used a rank score defined as follows:

Rank Score = (# of documents selected) / (index of lowest-ranked selected document)

The figure above shows an example of how a list of documents may be presented. The highlighted documents are the ones a human reviewer selected as relevant. Suggestion A has a rank score of 1 because all of its relevant documents occupy the top slots. Suggestions B and C both have a rank score of 0.5. Even though Suggestion B has a correctly predicted document in the first slot, it is penalized because another relevant document appears low on the list. Suggestion D has the lowest rank score of the group, 0.25, because its relevant documents appear far down the list.

This allowed us to evaluate not only whether the model identifies the correct documents, but also whether it presents them in a position that meaningfully enhances reviewer efficiency, which is ultimately the goal of the ranking stage.
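The rank score formula can be implemented in a few lines. This sketch assumes 1-based positions and counts only the selected documents that appear in the suggested list. It also returns 0.0 when none of them appear, a case the formula does not cover. The four lists below are hypothetical, built to reproduce the scores described for Suggestions A to D; they are not the exact lists in the figure.

```python
def rank_score(ranked_ids, selected_ids):
    """Rank Score = (# of selected documents in the list) /
    (1-based position of the lowest-ranked selected document).

    Returns 0.0 when no selected document appears (an assumption)."""
    selected = set(selected_ids)
    positions = [i for i, d in enumerate(ranked_ids, start=1)
                 if d in selected]
    if not positions:
        return 0.0
    return len(positions) / positions[-1]


suggested = ["a", "b", "c", "d"]
print(rank_score(suggested, {"a", "b"}))  # 1.0  (like Suggestion A)
print(rank_score(suggested, {"a", "d"}))  # 0.5  (like Suggestion B)
print(rank_score(suggested, {"b"}))       # 0.5  (like Suggestion C)
print(rank_score(suggested, {"d"}))       # 0.25 (like Suggestion D)
```

Because the denominator is the position of the lowest-ranked hit, a single relevant document buried deep in the list lowers the score even when the top slot is correct.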

Key Modeling and Evaluation Lessons From Document Selection

Looking back, this pipeline played an important role in defining how we approach document selection as a modeling problem. The work highlighted where lightweight filtering was sufficient, where contextual signals mattered most, and how to evaluate performance in a way that reflected the reviewer experience rather than just raw classification metrics.

The lessons from this phase influenced how we think about model architecture, how we represent clinical information, and how we shape relevance decisions around procedural context.

Over time, those insights led to multiple stages of refinement. While the solution described here is an earlier stage in that evolution, it still shapes the modeling strategies and problem framing behind our current system, and it remains a key step in the learning process that drives our development forward.

While human review remains an important part of prior authorization, the system has meaningfully reduced the manual effort involved and supported a more consistent and efficient workflow for hospital teams.

AKASA

Jan 9, 2026

AKASA is the preeminent provider of generative AI solutions for the healthcare revenue cycle.